By Henry Innis, Mutinex Co-Founder and CEO

Brand Equity was a big, hairy problem (the kind Mutinex Data Science loves)

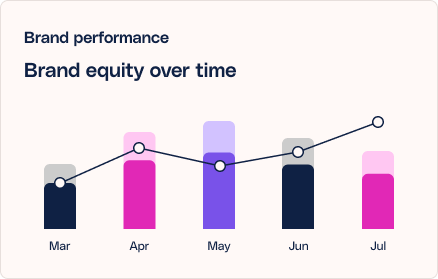

Mutinex recently released a new feature called “Brand Equity”. Brand has been notoriously hard to measure over the years, and many people were surprised we pulled it off. In this blog post I’m going to (kind of) open-source Brand Equity: what we did to model it and how we as a team got there. The innovation came from two places: model development and signal innovation. It’s an incredibly exciting project for us, and one we wanted to share.

Importantly, we believe sharing these approaches makes the industry better as a whole. Sure, our competitors are likely to copy us, but imitation is flattery. And the more brand marketers get access to this important feature (even through competitor copycats), the better off marketing will be.

The fundamental issue is signal

When dealing with brand data, the major issue is signal. Most brand data doesn’t move much: for a major brand or business, awareness might move a couple of percentage points in a year. Whilst we know these metrics matter, decoding them into tangible numbers has been a challenge.
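To see why a couple of points a year is so hard to read, it helps to compare that movement with survey sampling noise. The sketch below is an illustration under stated assumptions, not part of the Brand Equity method: it assumes simple random sampling, a hypothetical 30% unprompted-awareness level and a hypothetical 500 respondents per monthly wave, and uses the textbook standard error of a proportion, √(p(1−p)/n).

```python
import math


def awareness_standard_error(p: float, n: int) -> float:
    """Standard error, in percentage points, of a survey proportion p
    measured on n respondents (simple random sampling assumed)."""
    return 100 * math.sqrt(p * (1 - p) / n)


def min_detectable_shift(p: float, n: int, z: float = 1.96) -> float:
    """Approximate smallest change, in points, between two independent
    waves of n respondents that clears a two-sided test at critical
    value z (1.96 corresponds to roughly 95% confidence)."""
    se_difference = math.sqrt(2) * awareness_standard_error(p, n)
    return z * se_difference


# Hypothetical tracker: 30% unprompted awareness, 500 respondents per wave.
se = awareness_standard_error(0.30, 500)
mds = min_detectable_shift(0.30, 500)
print(f"Standard error per wave: {se:.2f} pts")
print(f"Minimum detectable wave-on-wave shift: {mds:.2f} pts")
```

Under these assumptions the standard error is about 2 points per wave, and a wave-on-wave change has to exceed roughly 5.7 points to stand out, so a 2-point annual movement is buried in noise unless many waves are pooled.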

There have been a number of attempts to solve this through signal engineering with more real-time signals, including:

- Share of Search

- Branded Search terms

- Social Listening

The challenge is that these signals don’t give a clean view of brand reputation. For example, heavily searched brands tend to be incumbents, and search volume doesn’t give a leading signal of future demand. Social listening often spikes when reputation is tanking, not when it is improving. It’s a huge problem: the signals aren’t strong, and there’s no uniform proxy anyone can adopt.

Engineering signals is key to any model. From a first-principles perspective, we need signals that reflect what we actually know to be true in reality. The reason is simple: too many models are built in ways that overfit. Branded search is a really good example.

Branded search is a promising but imperfect signal

Branded search has been one attempt to generate a better top-of-mind “signal” of the brand’s health. The challenge is that branded search is also heavily collinear with market share: in essence, your share of search reflects your share of market. Both are close proxies for brand, and a deterioration in branded search is often a great lead indicator of something happening to your future market share. But it isn’t the strong signal of brand and preference we’re looking for.
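Collinearity like this can be quantified. A common diagnostic is the variance inflation factor (VIF); with only two regressors it reduces to 1 / (1 − r²), where r is their correlation. The sketch below uses made-up share-of-search and market-share series purely for illustration; the figures are not Mutinex data.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length series."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)


def vif_two_regressors(r: float) -> float:
    """VIF of either regressor when a model contains exactly two of them."""
    return 1.0 / (1.0 - r ** 2)


# Hypothetical quarterly series, in percent.
share_of_search = [22, 23, 25, 24, 26, 28, 27, 29]
market_share = [20, 21, 23, 23, 24, 26, 26, 27]

r = pearson_r(share_of_search, market_share)
vif = vif_two_regressors(r)
print(f"correlation = {r:.3f}, VIF = {vif:.1f}")
```

With these illustrative numbers r is about 0.98 and the VIF is about 29, far above the 5 to 10 range often used as a warning threshold, which is why a model struggles to separate the two effects.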

Sure, it contains lots of strong signals of intent. But take a company in crisis: it’s likely to be searched a lot more. That isn’t a signal that the brand will generate more demand in future; it’s a signal that the brand is likely to decline. So engineering signals around search is notoriously challenging.

First principles: what are we trying to reflect?

Note: my understanding of this topic derives from reading “How Brands Grow” by Byron Sharp, an excellent book I’d recommend to anyone who wants to understand the science of marketing rather than the fiction of it. Our data science team also reviewed a number of academic papers along the way.

From a first-principles perspective, we’re trying to reflect how people remember a brand in buying moments. A strong brand comes to mind in the right ways when someone goes to buy. It’s that simple. Memory structures and associations build the consumer’s memory of the brand, so that it comes to mind at key buying moments. That’s the simple explanation of “how” a brand works.

This explains some of the challenges with the signals we need. We’re not looking for one signal but several: one that reflects whether the brand comes to mind first for consumers, and one that reflects the preferences or associations consumers hold once it does.

Collecting the right signals

Within brand tracking there are a number of measures that do this. For top of mind, the best proxy sits under the Awareness category. For preference, a standardised measure is better than a customised survey question, for reasons of scale.

Standard prompted awareness tends to do poorly in this context. It lacks variation, which models need in order to learn from highs and lows. Ask most people about a massive brand and they’ll recognise it, which negates the “top of mind” factor we’re looking to represent (the factor that better reflects the buying moment). Not only is the signal wrong; because it lacks variation, it is also virtually impossible to model.
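Why does low variation make a metric so hard to model? In a simple regression y = a + b·x + noise, the standard error of the slope b is σ / √Σ(xᵢ − x̄)², so a metric that barely moves yields a very imprecise coefficient. The sketch below compares two hypothetical monthly series, a prompted-awareness metric stuck at 95 to 96% and an unprompted-awareness metric ranging from 22 to 33%, assuming the same known residual noise σ for both.

```python
import math
import statistics


def slope_standard_error(x: list[float], residual_sd: float) -> float:
    """Standard error of the OLS slope for y = a + b*x + noise,
    treating the residual standard deviation as known."""
    mean_x = statistics.fmean(x)
    sum_sq_dev = sum((v - mean_x) ** 2 for v in x)
    return residual_sd / math.sqrt(sum_sq_dev)


# Hypothetical 12 months of tracking data, in percent.
prompted = [95, 96, 95, 96, 95, 96, 95, 96, 95, 96, 95, 96]
unprompted = [22, 25, 27, 24, 30, 28, 26, 31, 29, 27, 33, 30]

se_prompted = slope_standard_error(prompted, residual_sd=1.0)
se_unprompted = slope_standard_error(unprompted, residual_sd=1.0)
print(f"slope SE, prompted: {se_prompted:.3f}")
print(f"slope SE, unprompted: {se_unprompted:.3f}")
print(f"ratio: {se_prompted / se_unprompted:.1f}x")
```

With these invented series the prompted metric’s slope is about six times less precise than the unprompted one, even though the noise is identical.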

Building a strong model

Now that we have more promising signals, we need a model to handle them. First, we give the model a baseline signal (in this case, unprompted awareness) that it can then “boost” with other signals.

Because we are working in a “weak signal” environment and need to produce a strong signal, we selected a methodology from the Gradient Boosting family, which combines many weak learners into a strong predictor. Modifying and adapting that code yielded significant breakthroughs in linking brand to outcomes.
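The idea of starting from a baseline and letting boosting add the rest can be sketched in a few lines. This is a minimal, from-scratch illustration of least-squares gradient boosting with decision stumps, not Mutinex’s modified code: the prediction is initialised with a baseline derived from unprompted awareness (the 4.0 scaling is a hypothetical assumption), and each round fits a stump to the residuals using other hypothetical signals (preference and consideration). All series are invented.

```python
def fit_stump(X, residuals):
    """Least-squares decision stump: the single (feature, threshold) split
    whose two group means best fit the residuals. Returns None if no
    feature has more than one distinct value."""
    best = None
    for j in range(len(X[0])):
        # Splitting at the largest value would leave the right side empty.
        for t in sorted({row[j] for row in X})[:-1]:
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            left_mean = sum(left) / len(left)
            right_mean = sum(right) / len(right)
            sse = sum((r - left_mean) ** 2 for r in left) + sum(
                (r - right_mean) ** 2 for r in right
            )
            if best is None or sse < best[0]:
                best = (sse, j, t, left_mean, right_mean)
    return None if best is None else best[1:]


def predict_stump(stump, row):
    j, threshold, left_mean, right_mean = stump
    return left_mean if row[j] <= threshold else right_mean


def boost_from_baseline(baseline, X, y, n_rounds=50, learning_rate=0.1):
    """Squared-error gradient boosting started from a supplied baseline
    prediction rather than a constant. For squared error the negative
    gradient is the residual y - prediction, so each round fits a stump
    to the residuals and adds a shrunken copy of it."""
    pred = list(baseline)
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(X, residuals)
        if stump is None:
            break
        stumps.append(stump)
        pred = [p + learning_rate * predict_stump(stump, row) for p, row in zip(pred, X)]
    return pred, stumps


def mse(y, pred):
    return sum((a - b) ** 2 for a, b in zip(y, pred)) / len(y)


# Hypothetical monthly data.
unprompted = [22, 25, 27, 24, 30, 28, 26, 31, 29, 27, 33, 30]
preference = [40, 42, 41, 45, 44, 47, 46, 48, 50, 49, 52, 51]
consideration = [18, 19, 21, 20, 23, 22, 21, 24, 24, 23, 26, 25]
sales = [110, 122, 128, 124, 140, 138, 131, 150, 146, 139, 160, 154]

baseline = [4.0 * a for a in unprompted]  # hypothetical baseline scaling
X = [[p, c] for p, c in zip(preference, consideration)]
pred, stumps = boost_from_baseline(baseline, X, sales)
print(f"MSE baseline only: {mse(sales, baseline):.1f}")
print(f"MSE after boosting: {mse(sales, pred):.1f}")
```

Starting from a baseline rather than a constant is the same idea as LightGBM’s `init_score` or XGBoost’s `base_margin`. On real data you would also hold out periods to choose the number of rounds, since boosting on 12 points will readily overfit.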

How we did it

At first we cycled through around 20 metrics individually to identify which ones consistently found a fit. Early on, the model’s fit was relatively poor. It turns out individual metrics don’t predict Brand Equity consistently.
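One way to make “consistently found a fit” concrete is to score each candidate metric on several separate time windows and keep the ones that fit in most of them. The sketch below is an assumption about how such a screen could look, not the process Mutinex used: it computes the univariate R² of each metric against sales in non-overlapping windows and ranks metrics by the share of windows clearing a hypothetical 0.5 threshold. Metric names and data are invented.

```python
def r_squared(x, y):
    """R² of a simple linear regression of y on x (the squared correlation)."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    if sxx == 0 or syy == 0:
        return 0.0  # a flat series explains nothing
    return sxy ** 2 / (sxx * syy)


def fit_consistency(metric, target, window=6, threshold=0.5):
    """Share of non-overlapping windows in which the metric alone explains
    at least `threshold` of the target's variance."""
    scores = [
        r_squared(metric[s:s + window], target[s:s + window])
        for s in range(0, len(target) - window + 1, window)
    ]
    return sum(score >= threshold for score in scores) / len(scores)


def screen_metrics(metrics, target, **kwargs):
    """Rank candidate metrics from most to least consistent fit."""
    ranked = [(name, fit_consistency(series, target, **kwargs)) for name, series in metrics.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


sales = [110, 122, 128, 124, 140, 138, 131, 150, 146, 139, 160, 154]
candidates = {
    "prompted_awareness": [95, 96, 95, 96, 95, 96, 95, 96, 95, 96, 95, 96],
    "unprompted_awareness": [22, 25, 27, 24, 30, 28, 26, 31, 29, 27, 33, 30],
}
ranking = screen_metrics(candidates, sales)
for name, score in ranking:
    print(f"{name}: fits in {score:.0%} of windows")
```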

The underlying reason is what Brand Equity actually is: a function of perception and awareness. In essence, it takes a basket of metrics, rather than any single metric, to reflect it as one consistent signal.
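A basket of metrics can be turned into one consistent signal in several ways. The simplest, shown here as a hypothetical sketch rather than Mutinex’s construction, standardises each metric to z-scores and takes a weighted average. Equal weights are an assumption; in practice weights might come from a model or from the first principal component. Metric names and values are invented.

```python
import statistics


def zscores(series):
    """Standardise a series to mean 0 and (population) standard deviation 1."""
    mean = statistics.fmean(series)
    sd = statistics.pstdev(series)
    return [(v - mean) / sd for v in series]


def composite_index(metrics, weights=None):
    """Weighted average of standardised metrics, one value per period.
    Defaults to equal weights (an assumption, not a recommendation)."""
    names = list(metrics)
    if weights is None:
        weights = {name: 1.0 / len(names) for name in names}
    standardised = {name: zscores(metrics[name]) for name in names}
    n_periods = len(metrics[names[0]])
    return [
        sum(weights[name] * standardised[name][t] for name in names)
        for t in range(n_periods)
    ]


# Hypothetical quarterly tracking data, in percent.
basket = {
    "unprompted_awareness": [22, 25, 27, 30],
    "preference": [40, 42, 41, 45],
    "consideration": [18, 19, 21, 20],
}
index = composite_index(basket)
print([round(v, 2) for v in index])
```

Standardising first stops the metric with the largest raw scale from dominating the index.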

We had to engineer that baseline into the modelling methodology above to reflect reality more accurately. What we didn’t do was integrate it into the main model: the way the data is collected, and the minor degradation in model accuracy, would have made that challenging.

Benefits of a Brand Equity model

Okay, so you might be wondering: what are the benefits of a Brand Equity model? It comes down to two big questions.

Going ‘dark’ (which is usually bad): Businesses often ask, “what is the cost of going dark?” Brand Equity provides a data point that, whilst not exhaustive, gives some indication of that cost.

Value of brand (which is usually high): Businesses also ask what moving brand-tracking metrics up or down by a few percentage points is worth in terms of outcomes. This type of modelling gives them that insight quickly.
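Both questions reduce to the same arithmetic once a model has estimated how much baseline sales move per point of brand equity. The sketch below assumes a constant, linear effect and uses entirely hypothetical inputs: 50,000 in monthly baseline sales per point, a 2-point gain held for a year and, for going dark, an erosion of 0.25 points per month. None of these figures come from Mutinex results.

```python
def baseline_sales_impact(sales_per_point, point_path):
    """Total change in baseline sales implied by a path of brand-metric
    changes (points relative to today, one entry per month), assuming a
    constant, linear sales-per-point effect."""
    return sum(sales_per_point * delta for delta in point_path)


SALES_PER_POINT = 50_000  # hypothetical monthly baseline sales per point

# Value of brand: move up 2 points and hold for 12 months.
gain = baseline_sales_impact(SALES_PER_POINT, [2] * 12)

# Going dark: lose 0.25 points per month, cumulatively, for 12 months.
dark = baseline_sales_impact(SALES_PER_POINT, [-0.25 * m for m in range(1, 13)])

print(f"Value of a sustained 2-point gain: {gain:,.0f}")
print(f"Cost of 12 months dark: {dark:,.0f}")
```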

Big limitations to measuring brand equity

There are some big limitations to Brand Equity modelling that are worth considering if you want to be in this space. They are:

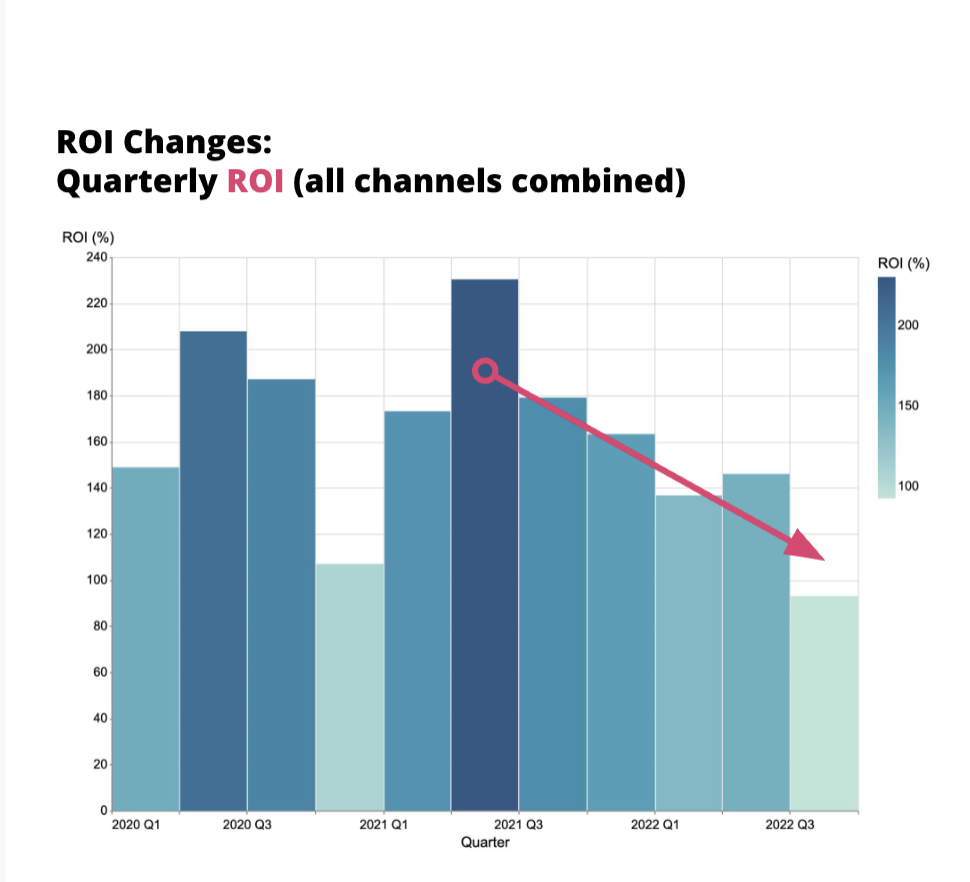

- Model integration. We didn’t integrate Brand Equity into the main model because of the challenges in linking core marketing activity and investment to uplifts in brand metrics in specific time periods. This in itself made it hard to build true, integrated “ROI” models by channel.

- Model principles. The model relies on a strong Bayesian belief that the brand generates the overall baseline sales trend. Whilst we believe this to be true, this is a belief inserted into the model, and thus the model is trying to estimate our belief rather than learning purely from the data.

- Channel breakdowns. We were unable to break down accurately by channel. This is less an issue with the model itself and more to do with the challenges of collecting brand data.
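To see what “estimating our belief” means in practice, consider the simplest Bayesian case: a normal prior on a parameter combined with normally distributed data. The posterior mean is a precision-weighted average of the prior mean and the data mean, so a tight prior pulls the estimate toward the belief. In this hypothetical sketch the parameter is the share of the baseline trend attributed to brand; the prior of 0.8, the data mean of 0.5, the noise level and the 24 observations are all invented for illustration.

```python
def normal_posterior(prior_mean, prior_sd, data_mean, data_sd, n):
    """Conjugate normal-normal update with known data noise.
    Returns the posterior mean and posterior standard deviation."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = n / data_sd ** 2
    posterior_precision = prior_precision + data_precision
    posterior_mean = (
        prior_precision * prior_mean + data_precision * data_mean
    ) / posterior_precision
    return posterior_mean, posterior_precision ** -0.5


# Hypothetical: 24 monthly observations averaging 0.5, noise sd 0.5.
strong_mean, _ = normal_posterior(0.8, 0.05, 0.5, 0.5, 24)  # tight prior
weak_mean, _ = normal_posterior(0.8, 0.5, 0.5, 0.5, 24)     # loose prior
print(f"strong prior -> {strong_mean:.3f}, weak prior -> {weak_mean:.3f}")
```

With the tight prior the estimate stays near 0.74 even though the data point to 0.5; with a loose prior it lands near 0.51.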

Promising future routes

Improving the signal further means improving either the collection method or its cost. One promising avenue we’ve seen is Tracksuit, a new startup aiming to democratise market research in the same way Mutinex is trying to democratise MMM. We believe speeding up and modernising both the collection and presentation of data will have a huge impact on how it can be converted into signal within foundational models.

The second area is signal engineering. Whilst the core Brand Equity data is really strong, there may be additional standardised signals we can use to further augment this area of research. We plan to look into this, but we’re conscious that there is so much collinearity in this space that it may be challenging.

The third route is linking marketing activity to brand-metric uplifts in specific time periods. We’re confident this can be done, particularly if signal collection improves over time. We’re looking into this with serious interest as we start to build “long-short” models, though causality remains a challenge when linking brand investment from years ago to the present day. We’re hesitant to do anything that would undermine the causality tests within our model.

Final notes…

We’re super pleased to share this with the community. Hopefully more transparency around how we do things leads to a levelling up in how marketers work with brand metrics and link them to P&L activity.

We’re happy to show our competitors, who are undoubtedly reading this, some of our work if it’s useful. After all, we’re all on a mission to improve the ROI and quality of marketing, and what better way than to work together to solve these big problems?

So what can you do as a marketer today? Start collecting brand equity metrics – and collect them at least monthly!